This is part 2 of my Storage Spaces series. I will look into setting up Storage Spaces (NOT Storage Spaces Direct (S2D)) in Server 2025, and check how parity performs now. This series has nothing to do with Home Assistant, except that it provides hosting capabilities for the Home Assistant appliance.

In this part, I will take Storage Spaces in Server 2025 for a spin before setting it up for my own use in part 3. To be honest, I don’t have the time to try all combinations in something that is this poorly documented. Microsoft has done a terrible job documenting Storage Spaces. Old documentation is mixed with new, and you can’t see what applies to which version of Windows Server. Lots of links point to Storage Spaces Direct documentation, which is a completely different beast, and some information can only be found in blog posts. To prove my point, there are only 4 topics under the Storage Spaces documentation, while there are more than 30 topics under the Storage Spaces Direct documentation.

This leads to a lot of confusion online, and it’s a nightmare to search for info on Storage Spaces, because most information is about Storage Spaces Direct.

A simple question: is tiered storage like mirror-accelerated parity officially supported by Microsoft in Storage Spaces when you don’t deploy storage bus cache? I can’t find the answer in the Microsoft documentation, and that is simply not good enough. You can easily build it via PowerShell, but if it isn’t a supported configuration, I won’t use it. Even though this is a home server / home lab, I don’t want a Windows Update to take out my storage. In the Server Manager GUI you can choose tiered storage, but it only allows you to choose between simple and mirror resiliency, so mirrored tiered storage must be officially supported. I am looking for a parity setup, though, to improve storage efficiency.

Another important thing that is undocumented is which SSD / NVMe drives are supported. Microsoft made a blog post about not using consumer-grade SSDs for S2D, which I am sure also applies to Storage Spaces. But the huge problem here, as you can read in the comments on the blog post, is that some enterprise drives that should work are not recognized as devices with power-loss protection. That means Storage Spaces will only send synchronous writes to the devices and not use their internal buffer, which degrades performance a lot on most drives. I have not been able to find a list of supported devices with power-loss protection, so I could either gamble and buy enterprise-grade NVMe drives, hoping they are recognized correctly, or buy a device that doesn’t rely on an internal buffer to reach high write performance. I chose the latter and bought 2 Intel Optane 905p drives at 960GB, since these drives can sustain write speeds of 2200 MB/s without any help from cache or buffers, and their fantastic performance at low QD and superb endurance rating make them perfect as cache drives in my setup.

But enough complaining for now, let’s see what happens when you configure Storage Spaces via the Server Manager GUI.

I have 8 HDDs and 2 NVMe drives. When I make 1 storage pool with all drives, and make 1 virtual disk with dual parity, I get no options to select cache drives. I can see the GUI chose 6 columns, which is a good call based on the number of HDDs, and a default interleave of 256KB. Then I format with ReFS 4KB, run some tests, and check the performance counters to see what is happening. To my surprise, it really looks like the NVMe disks are used like normal parity member disks in this configuration. What a waste of flash storage. It raises a pile of questions, but I won’t investigate, since I would never run a configuration like that.

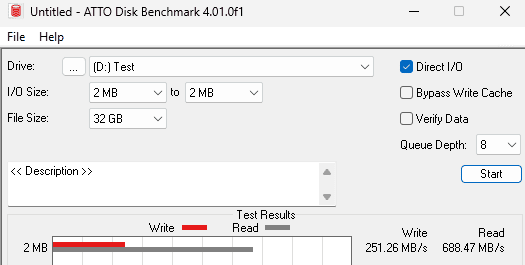

I deleted the storage pool and created a new one with only HDDs. This allows me to check out Storage Spaces parity performance in Server 2025 without any cache drives. I created everything via the GUI in Server Manager again, and it chose 6 columns and 256KB interleave again. I then tested performance with ATTO Disk Benchmark, with Direct I/O and a QD of 8, which seems fair since I have 8 drives in the pool. The performance is for sure higher with a higher QD, but you probably won’t see that in a home server scenario.

I will just share the result for I/O size 2MB and file size 32GB, since it gives a clear picture of the sequential performance without caching. Write performance is about 250MB/s and read performance is about 700MB/s, which is pretty decent. Write performance equal to that of 1 HDD is quite normal for small parity pools in storage systems without cache or other improvements.

Storage efficiency is 66% because we use 6 columns and have dual parity, so 4 of the 6 columns hold data. I could increase efficiency to 71% by going with 7 columns, but with 8 drives it would mean I could only remove 1 drive from the pool at a time. It could be enough, and I think it’s a personal preference whether you want extra security or more free space. I think Microsoft did it right by choosing columns with the formula: drives - parity drives = number of columns, since most would expect to be able to remove 2 drives from a dual parity pool.
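The arithmetic behind those numbers can be sketched in a few lines of Python. This is only an illustration of the reasoning above: the function names are my own and not part of any Storage Spaces tooling, and it assumes that efficiency is simply data columns divided by total columns. The post rounds the results down to 66% and 71%.

```python
def parity_efficiency(columns: int, parity_columns: int = 2) -> float:
    """Fraction of raw capacity that holds data (assumed: data columns / total columns)."""
    if columns <= parity_columns:
        raise ValueError("need more columns than parity columns")
    return (columns - parity_columns) / columns


def spare_drives(total_drives: int, columns: int) -> int:
    """Drives in the pool beyond what one stripe needs (drives - columns)."""
    return total_drives - columns


if __name__ == "__main__":
    # 8 HDDs, dual parity, comparing the GUI default (6 columns) with 7 columns
    for cols in (6, 7):
        eff = parity_efficiency(cols) * 100
        print(f"{cols} columns: {eff:.1f}% efficiency, "
              f"{spare_drives(8, cols)} drive(s) beyond the column count")
```

With 8 drives, 6 columns leaves 2 drives beyond the column count, while 7 columns leaves only 1, which is the trade-off described above.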

I have read blogs stating that performance of Storage Spaces parity is way better if you align interleave, columns, and allocation unit size. I had to test this of course, but right away there is an issue: I can’t create a virtual disk with an interleave of less than 64KB. With 6 columns, where 2 are parity, that means my full stripe is 64KB x 4 data columns = 256KB. I can format the disk using NTFS with a 256KB allocation unit size, but it doesn’t change anything with regard to performance. I tried a lot of different combinations to see if I could find a sweet spot where performance would increase drastically, but I couldn’t find it. Using 64KB interleave gave me lower sequential performance, so you should leave this at the default 256KB unless you have an IO-heavy workload, like a SQL database.
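For reference, the alignment rule those blogs describe can be written down as a small Python check. This is a sketch under my own assumptions: the helper names are hypothetical, and "aligned" here is taken to mean that the allocation unit size is a whole multiple of the data part of one stripe (interleave x data columns). It only does the arithmetic; it says nothing about how Storage Spaces behaves internally.

```python
def full_stripe_kb(interleave_kb: int, columns: int, parity_columns: int = 2) -> int:
    """Data held by one full stripe: interleave x data columns."""
    data_columns = columns - parity_columns
    if data_columns <= 0:
        raise ValueError("need more columns than parity columns")
    return interleave_kb * data_columns


def is_aligned(cluster_kb: int, interleave_kb: int, columns: int,
               parity_columns: int = 2) -> bool:
    """Assumed rule: allocation unit size is a whole multiple of the data stripe."""
    stripe = full_stripe_kb(interleave_kb, columns, parity_columns)
    return cluster_kb % stripe == 0


if __name__ == "__main__":
    # The setup tested above: 6 columns, dual parity, 64KB interleave, 256KB NTFS clusters
    print(full_stripe_kb(64, 6), is_aligned(256, 64, 6))
    # The GUI default: 256KB interleave with 4KB clusters
    print(full_stripe_kb(256, 6), is_aligned(4, 256, 6))
```

By this rule the 64KB / 256KB combination is aligned and the GUI default is not, yet in my tests the default was the faster of the two for sequential I/O.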

Microsoft has made several claims in the past to have increased parity performance, and I guess they actually did it. I think they solved the whole alignment problem, so the performance is decent without having the perfect interleave, column, and allocation unit size setup, and that is GREAT!

The above is great if you have 8 HDDs and want to use them in dual parity and get decent performance without being an IT wizard, because you can use the Server Manager GUI to set up your storage. Just make sure you don’t include SSDs in the storage pool, and you should be good to go.

All this is just background information that I use for setting up my own storage in the next part, where I will configure mirror-accelerated parity in a way that I know is supported in Storage Spaces.

In this article I always refer to and use dual parity instead of single parity. Why? When you use large drives (1TB+) in storage systems, NEVER use single parity / RAID5. Do a Google search if you want to know why, or look at a RAID reliability calculator.

I use WD Gold enterprise hard drives, and that might have a positive effect on performance. They have OptiNAND, but they don’t have ArmorCache, which could potentially have a huge positive impact on write performance in Storage Spaces, since ArmorCache should in theory allow synchronous writes at the speed of async writes.

In the next part I will introduce my Intel Optane drives to the mix, and that will change performance characteristics completely.